At what point in the future do we evaluate program success?

This blog was originally published by Feedback Labs on July 1, 2015. Click here to read it at Feedbacklabs.org.

We want to know if our social programs are working. But when, really, do we know? And why do we often make the implicit assumption that positive change is linear across time?

In 1994, the US Department of Housing and Urban Development (HUD) launched Moving to Opportunity (MTO), a social experiment designed to evaluate how neighborhoods affect the social and economic outcomes of disadvantaged families in the United States. The study randomized public-housing residents in high-poverty neighborhoods to test whether offering housing vouchers would help improve their lives by allowing them to move to low-poverty neighborhoods.

Major studies of the program were conducted at the following time intervals after the MTO program began: 2 years, 4 to 7 years, 5 years on average, 10 to 15 years, and 15 to 18 years.

Initial evidence from the RCT was disappointing: it found that the program produced little or no improvement. This contradicted both theory and the non-experimental evidence of key sociologists who study neighborhood effects. But the newest paper, released in May 2015, shows that treatment effects are, in fact, substantial.

One of the many difficulties with randomized controlled trials (RCTs) in social policy is deciding when to conduct surveys after program implementation. How long does it take for an effect (if any) to be seen?

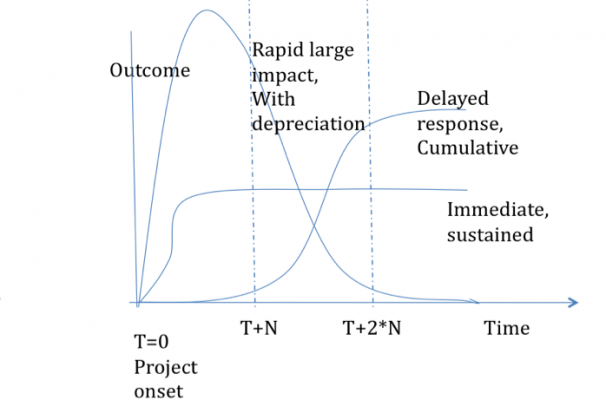

Change is not necessarily temporally linear. Think of an impulse response function in which the impulse is the program intervention (the housing voucher) at the project’s onset (t=0). The outcome of interest (e.g. education, health, or income) could just as likely (maybe even more likely) follow a non-linear path. It could take a while for any discernible impact to appear, and then the impact could suddenly grow exponentially at year 5. In that case, an arbitrary decision to evaluate the study at year 4 would show the program to be unsuccessful.

Photo Credit: Lant Pritchett, 2011 (unpublished)
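The non-linear trajectory described above can be made concrete with a small simulation. This is only a minimal sketch: the two curves, the function names, the detection threshold, and the survey schedule are all hypothetical choices made for illustration, not estimates from the MTO studies.

```python
import math

# All functional forms and parameter values below are illustrative
# assumptions, not estimates from the MTO studies.

def linear_impact(t, horizon=18.0):
    """Impact that accrues at a constant rate, reaching 1.0 at `horizon` years."""
    return min(max(t, 0.0), horizon) / horizon

def delayed_impact(t, takeoff=5.5, steepness=3.0):
    """S-shaped (logistic) impact: close to 0 before `takeoff`, close to 1 after."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - takeoff)))

def looks_successful(trajectory, survey_years, threshold=0.1):
    """For each survey year, report whether the impact is large enough to detect."""
    return {year: trajectory(year) >= threshold for year in survey_years}

survey_years = [2, 4, 5, 10, 18]  # hypothetical survey schedule
for name, path in [("linear", linear_impact), ("delayed", delayed_impact)]:
    print(name, looks_successful(path, survey_years))
```

Under these assumed curves, a survey at year 4 would flag the linear program as working and the delayed one as a failure, even though both end up at essentially the same impact by year 18. The verdict depends on when the survey happens to fall relative to the takeoff point.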

This does not mean that we should wait 18 years to evaluate programs. Firstly, who knows if the critical juncture for observable change is at year 18 or year 19? It’s arbitrary.

But secondly, 18 years is a long time. So long, in fact, that the newest study measures inter-generational effects.

Michael Woolcock writes extensively about this in a paper that unpacks our understanding of impact trajectories and efficacy. He writes that “the absence of [the functional form of the impact trajectory] can lead to potentially major Type I and II errors in assessing the efficacy of projects…”. Woolcock explains that there are a few ways to better understand the shape of the trajectory:

- Raw experience

- Solid theory

- Regular collection of empirical evidence

The third option, he argues, is the most defensible and can be done as part of a project’s monitoring procedures. Is it possible for us to perceive project monitoring less as a compliance issue and more as a way of collecting feedback for learning and course-correction?

Do we, as practitioners of social policy, need 18 years just to learn enough to improve a project? Do we need an RCT to “prove” non-experimental evidence? RCTs are interesting for academic papers, but we wonder: would it have been possible for participants in the housing voucher program to provide feedback earlier in the program, to let implementers and key decision-makers know what is working, what they like or dislike, and why? And would this “evidence” have been enough (or better) to help us make investment decisions?